Multiple Partitions Memory Management:

This method can be implemented in three ways. These are:

- Fixed equal multiple partitions memory management
- Fixed variable multiple partitions memory management
- Dynamic multiple partitions memory management

A. Fixed Equal Multiple Partitions Memory Management Scheme:

In this

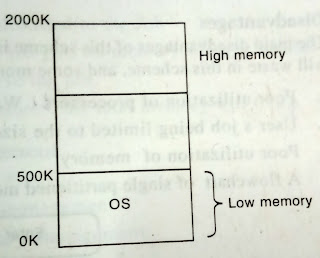

memory management scheme, the OS occupies lower memory, and the rest of main

memory is available for user space. The user space is divided into fixed

partition. The partition sizes are depended on operating system. For ex: if the

total main memory size is 6 MB, 1MB occupied by the operating system. The

remaining 5 MB is partitioned into 5 equal partitions (5x1 MB). J1, J2, J3, J4,

J5 are the five jobs to be loaded into main memory. Their sizes are:

| Job | Size |
|-----|------|
| J1 | 450 KB |
| J2 | 1000 KB |
| J3 | 1024 KB |
| J4 | 1500 KB |
| J5 | 500 KB |

Consider the following fig. for better understanding.

Fig: Main memory allocation

Internal and External fragmentation:

Job J1 is loaded into partition 1. The size of partition 1 is 1024 KB and the size of J1 is 450 KB, so 1024 - 450 = 574 KB of space is wasted. This wasted memory is called ‘internal fragmentation’.

However, there is not enough memory to load job J4, because the size of J4 (1500 KB) is greater than every partition (1024 KB). So an entire partition, i.e., partition 5, is wasted. This wasted memory is called ‘external fragmentation’.

Therefore, the total internal fragmentation is (1024 - 450) + (1024 - 1000) + (1024 - 500) = 574 + 24 + 524 = 1122 KB. (J3 is exactly 1024 KB, so it fills its partition and adds no internal fragmentation.)

Therefore, the external fragmentation for this scheme is 1024 KB (the whole of partition 5).

Memory wasted inside a partition is called ‘internal fragmentation’, and the wastage of an entire partition is called ‘external fragmentation’.
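The arithmetic above can be checked with a small simulation. This is a minimal sketch, assuming 5 user partitions of 1024 KB each and that every job goes into the lowest-numbered free partition that can hold it. External fragmentation is computed in this document's sense (whole partitions left empty). The names `allocate_equal`, `PARTITION_SIZE_KB`, and `NUM_PARTITIONS` are hypothetical, chosen only for illustration.

```python
# Hypothetical sketch: fixed equal multiple partitions (5 x 1024 KB user space).
PARTITION_SIZE_KB = 1024
NUM_PARTITIONS = 5

jobs = [("J1", 450), ("J2", 1000), ("J3", 1024), ("J4", 1500), ("J5", 500)]

def allocate_equal(jobs, size=PARTITION_SIZE_KB, count=NUM_PARTITIONS):
    """Return (placement, not_loaded); placement maps partition number -> (job, size)."""
    placement = {}
    not_loaded = []
    for name, job_size in jobs:
        if job_size > size:
            not_loaded.append(name)  # larger than every equal partition
            continue
        free = [p for p in range(1, count + 1) if p not in placement]
        if free:
            placement[free[0]] = (name, job_size)  # lowest-numbered free partition
        else:
            not_loaded.append(name)  # all partitions are occupied
    return placement, not_loaded

placement, not_loaded = allocate_equal(jobs)
# Internal fragmentation: unused space inside occupied partitions.
internal = sum(PARTITION_SIZE_KB - s for _, s in placement.values())
# External fragmentation (as defined in this text): whole partitions left empty.
external = PARTITION_SIZE_KB * (NUM_PARTITIONS - len(placement))

print(placement)
print("not loaded:", not_loaded)
print("internal:", internal, "KB")  # 1122 KB
print("external:", external, "KB")  # 1024 KB
```

Under these assumptions, J5 ends up in partition 4 and partition 5 stays empty, which matches the totals of 1122 KB and 1024 KB given in the text.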

Advantages:

- This scheme supports multiprogramming.
- Efficient utilization of the processor and I/O devices.
- It requires no special costly hardware.
- Simple and easy to implement.

Disadvantages:

- This scheme suffers from internal as well as external fragmentation.
- A single free partition may not be large enough to hold a job.
- It requires more memory than the single partition method.
- The size of a job is limited to the size of physical memory.

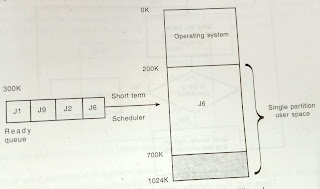

B. Fixed Variable Partition Memory Management Scheme:

In this scheme, the user space of main memory is divided into a number of partitions, but the partitions have different sizes. The operating system keeps a table indicating which partitions of memory are available and which are occupied. When a process arrives and needs memory, we search for a partition large enough for this process. If we find one, we allocate that partition to the process. For example, assume that we have 4000 KB of main memory and the operating system occupies 500 KB. The remaining 3500 KB is left for user processes, as shown in the following fig:

Fig: Scheduling example

- Out of the 5 jobs, J2 arrives first (5 ms). The size of J2 is 600 KB, so search the partitions from lower memory to higher memory for a large enough partition. P1 is larger than J2, so load J2 into P1.
- Of the remaining jobs, J1 arrives next (10 ms). Its size is 825 KB, so search for a large enough free partition. P4 is the first one that is large enough, so load J1 into P4.
- J5 arrives next; its size is 650 KB. No free partition is large enough to load it, so J5 has to wait until a large enough partition becomes available.
- J3 arrives next; its size is 1200 KB. No partition is large enough to load it.
- J4 arrives last; its size is 450 KB. Partition P3 is large enough to load it, so load J4 into P3.
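The walk-through above can be reproduced as a first-fit search over the OS's partition table. This is a minimal sketch under stated assumptions. P1 = 700, P3 = 525, P4 = 900, and P6 = 625 KB follow from the fragmentation figures later in this section. P2 = 400 KB and P5 = 350 KB are inferred from the external-fragmentation sum, and their order is an assumption. Arrival times are reduced to arrival order, and no job finishes during the run. The function name `first_fit_schedule` is hypothetical.

```python
# Hypothetical sketch: fixed variable partitions, first fit from lower memory.
# Partition sizes in KB; P2 and P5 are inferred values (assumption).
partitions = [("P1", 700), ("P2", 400), ("P3", 525), ("P4", 900), ("P5", 350), ("P6", 625)]

# Jobs in order of arrival: (name, size in KB).
arrivals = [("J2", 600), ("J1", 825), ("J5", 650), ("J3", 1200), ("J4", 450)]

def first_fit_schedule(partitions, arrivals):
    """Load each arriving job into the first free partition large enough for it."""
    occupied = {}  # partition name -> job name (the OS's partition table)
    waiting = []   # jobs for which no suitable free partition exists
    for job, size in arrivals:
        for part, part_size in partitions:  # scan from lower to higher memory
            if part not in occupied and part_size >= size:
                occupied[part] = job
                break
        else:
            waiting.append(job)  # no large enough free partition
    return occupied, waiting

occupied, waiting = first_fit_schedule(partitions, arrivals)
print(occupied)  # {'P1': 'J2', 'P4': 'J1', 'P3': 'J4'}
print(waiting)   # ['J5', 'J3']
```

The `for ... else` clause runs only when the inner loop finishes without `break`, which is exactly the "no partition found, so the job waits" case.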

Consider the following fig. for better understanding.

Fig: Memory allocation

Partitions P2, P5, and P6 are totally free; no processes are loaded in them. This wasted memory is called external fragmentation. The total external fragmentation is 400 + 350 + 625 = 1375 KB.

The total internal fragmentation is (700 - 600) + (525 - 450) + (900 - 825) = 100 + 75 + 75 = 250 KB.
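Both totals follow directly from the final allocation. This is a small sketch that recomputes them. It assumes partition sizes of P1 = 700, P2 = 400, P3 = 525, P4 = 900, P5 = 350, and P6 = 625 KB. The P2 and P5 values are inferred from the 400 + 350 terms and are an assumption. The dictionary names are illustrative only.

```python
# Hypothetical sketch: fragmentation after the fixed variable partition example.
# Partition sizes in KB (P2 = 400 and P5 = 350 are inferred, an assumption).
partition_size = {"P1": 700, "P2": 400, "P3": 525, "P4": 900, "P5": 350, "P6": 625}

# Final allocation: partition -> size of the job loaded in it (J2, J1, J4).
loaded = {"P1": 600, "P4": 825, "P3": 450}

# Internal: unused space inside each occupied partition.
internal = sum(partition_size[p] - job_size for p, job_size in loaded.items())
# External: total size of partitions that hold no process at all.
external = sum(size for p, size in partition_size.items() if p not in loaded)

print("internal fragmentation:", internal, "KB")  # 250 KB
print("external fragmentation:", external, "KB")  # 1375 KB
```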

Now you may wonder why this placement was chosen. The size of J2 is 600 KB, and it is loaded into partition P1, as shown in the above figure. In this case, the internal fragmentation is 100 KB. But if it were loaded into P6, the internal fragmentation would be only 25 KB. So why is it loaded into P1? Three algorithms answer this question: first fit, best fit, and worst fit.

First fit:

- Allocate the first partition that is big enough.
- Searching can start either from lower memory or from higher memory.
- We can stop searching as soon as we find a free partition that is large enough.

Best fit:

- Allocate the smallest partition that is big enough, i.e., select the partition that leaves the least internal fragmentation.

Worst fit:

- Search all the partitions and select the largest one, i.e., select the partition that leaves the maximum internal fragmentation.
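The three strategies differ only in which fitting partition they pick. This is a minimal sketch of each as a function over a list of free partitions, scanned from lower memory. It assumes the same partition sizes as the example (P2 = 400 and P5 = 350 KB are inferred). The function names are illustrative, not taken from any OS.

```python
from typing import List, Optional, Tuple

Partition = Tuple[str, int]  # (partition name, size in KB)

def first_fit(free: List[Partition], size: int) -> Optional[str]:
    # Stop at the first partition (lower memory first) that is big enough.
    for name, part_size in free:
        if part_size >= size:
            return name
    return None

def best_fit(free: List[Partition], size: int) -> Optional[str]:
    # Smallest partition that is big enough: least internal fragmentation.
    candidates = [(part_size, name) for name, part_size in free if part_size >= size]
    return min(candidates)[1] if candidates else None

def worst_fit(free: List[Partition], size: int) -> Optional[str]:
    # Largest partition of all: maximum internal fragmentation.
    candidates = [(part_size, name) for name, part_size in free if part_size >= size]
    return max(candidates)[1] if candidates else None

free = [("P1", 700), ("P2", 400), ("P3", 525), ("P4", 900), ("P5", 350), ("P6", 625)]
for strategy in (first_fit, best_fit, worst_fit):
    print(strategy.__name__, "->", strategy(free, 600))
# first_fit -> P1, best_fit -> P6, worst_fit -> P4
```

For the 600 KB job J2, first fit picks P1 (100 KB wasted), best fit picks P6 (25 KB wasted), and worst fit picks P4 (300 KB wasted). This is why J2 lands in P1 in the example, which uses first fit.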

Advantages:

- This scheme supports multiprogramming.
- Efficient processor and memory utilization is possible.
- Simple and easy to implement.

Disadvantages:

- This scheme suffers from internal and external fragmentation.
- There is a possibility of large external fragmentation.

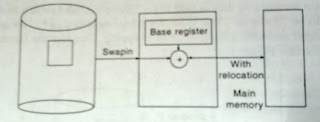

C. Dynamic Partition Memory Management Scheme:

To eliminate some of the problems with fixed partitions, an approach known as dynamic partitioning was developed. In this method, partitions are created dynamically, so that each process is loaded into a partition of exactly the same size as that process. In this scheme, the entire user space is initially treated as one “big hole”. The boundaries of partitions change dynamically and depend on the sizes of the processes. Consider the following example:

Fig: Memory allocation

Job J2 arrives first, so J2 is loaded into memory. Next, J1 arrives and is loaded into memory. Similarly, J5, J3, and J4 arrive, in that order. Consider the following fig:

Fig: Memory representation

In parts (a), (b), (c), and (d) of the above fig, jobs J2, J1, J5, and J3 are loaded. The last job is J4, and its size is 450 KB. However, only 225 KB of memory is available. This is not enough to load J4, so J4 has to wait until memory becomes available. Assume that after some time J5 finishes and releases its memory. The free memory now totals 225 + 650 = 875 KB, split into two separate holes of 650 KB and 225 KB. The 650 KB hole left by J5 is by itself large enough to load job J4. Consider the following fig:

Fig: (e) and (f)
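The sequence in figs (a) to (f) can be modelled with a list of free holes. This is a minimal sketch under stated assumptions. User space is 3500 KB starting at relative address 0; this is consistent with 600 + 825 + 650 + 1200 + 225 = 3500, but it is still an assumption. Jobs are placed by first fit, and a freed partition is merged with any adjacent hole. The names `load`, `release`, `holes`, and `allocated` are hypothetical.

```python
# Hypothetical sketch: dynamic partitioning with a hole list and first fit.
USER_SPACE_KB = 3500  # assumption: same user space as the previous example

holes = [(0, USER_SPACE_KB)]  # free holes as (start, size), sorted by start
allocated = {}                # job -> (start, size) of its exactly-sized partition

def load(job, size):
    """Carve a partition of exactly `size` KB from the first hole big enough."""
    for i, (start, hole_size) in enumerate(holes):
        if hole_size >= size:
            allocated[job] = (start, size)
            if hole_size == size:
                del holes[i]  # hole used up completely
            else:
                holes[i] = (start + size, hole_size - size)  # shrink the hole
            return True
    return False  # no single hole is large enough: the job must wait

def release(job):
    """Return a finished job's partition to the hole list, merging neighbours."""
    start, size = allocated.pop(job)
    holes.append((start, size))
    holes.sort()
    merged = [holes[0]]
    for s, sz in holes[1:]:
        last_s, last_sz = merged[-1]
        if last_s + last_sz == s:  # adjacent holes become one bigger hole
            merged[-1] = (last_s, last_sz + sz)
        else:
            merged.append((s, sz))
    holes[:] = merged

for job, size in [("J2", 600), ("J1", 825), ("J5", 650), ("J3", 1200)]:
    load(job, size)
print(holes)             # [(3275, 225)]
print(load("J4", 450))   # False: only 225 KB free
release("J5")
print(holes)             # [(1425, 650), (3275, 225)]
print(load("J4", 450))   # True: J4 goes into J5's old hole
print(holes)             # [(1875, 200), (3275, 225)]
```

No space is wasted inside any partition, but the leftover 200 KB and 225 KB holes are separate. This is the external fragmentation listed as this scheme's disadvantage.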

Advantages:

- In this scheme, partitions are changed dynamically, so this scheme doesn't suffer from internal fragmentation.
- Efficient memory and processor utilization is possible in this scheme.

Disadvantages:

- This scheme suffers from the problem of external fragmentation.