Round Robin Scheduling (RR):

The time sharing systems uses the Round Robin algorithm. The use of a small time quantum allows Round Robin to provide good response time. It is a preemptive algorithm.

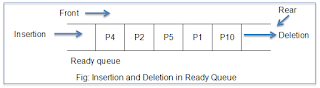

CPU selects the process from the ready queue. To implement RR

scheduling, ready queue is maintained as a FIFO queue. New processes are added

to the tail of the ready queue. The CPU scheduler picks the first process from

the ready queue, set a timer to interrupt after one time quantum and dispatches

the process.

With RR algorithm, the principal design issue is the length

of the time quantum or time slice to be used. If the time quantum is very

short, then short processes will move through the system relatively quickly. It

increases the processing overhead involved in handling the clock interrupt and

performing the scheduling and dispatch function. So, a very short time quantum

should be avoided.

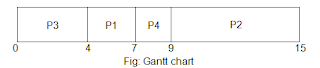

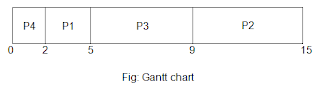

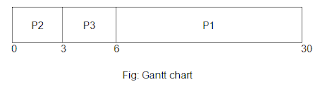

Consider the

set of processes with the burst time in milliseconds. All the processes arrive

at time 0. The Gantt chart, waiting time and so on are as follows:

|

Process |

Burst time |

|

P1 |

3 |

|

P2 |

6 |

|

P3 |

4 |

|

P4 |

2 |

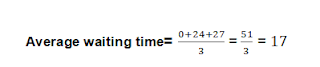

Waiting time:

|

Process |

Waiting time |

|

P1 |

0 + 6 = 6 |

|

P2 |

2 + 5 + 2 = 9 |

|

P3 |

4 + 5 =9 |

|

P4 |

6 = 6 |

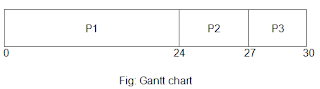

Turnaround time:

It is the sum of burst time + waiting time of

each process.

|

Process |

Turnaround time |

|

P1 |

3 + 6 = 9 |

|

P2 |

6 + 9 = 15 |

|

P3 |

4 + 9 = 13 |

|

P4 |

2 + 6 = 8 |