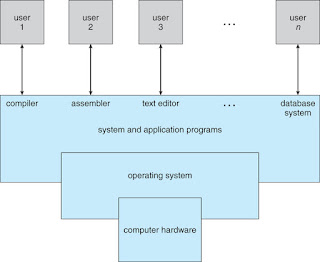

Types of OS are as follows:

Desktop Systems:

Personal computers (PCs) appeared in the 1970s. Initially, the CPUs in PCs lacked the features needed to protect an operating system from user programs.

Initially, PC operating systems were neither multiuser nor multitasking. Over time, however, the goals of these operating systems changed: instead of maximizing CPU and peripheral utilization, these systems chose to maximize user convenience and responsiveness.

The most popular examples of these systems include PCs running Microsoft Windows and the Apple Macintosh. Desktop operating systems benefited in several ways from the development of operating systems for mainframes: microcomputers were immediately able to adopt some of the technology developed for larger operating systems.

Because the hardware costs of microcomputers are sufficiently low, individuals have sole use of the computer, and CPU utilization is no longer a prime concern. As a result, some of the design decisions made in operating systems for mainframes may not be appropriate for smaller systems.

Other design decisions, however, still apply. For example, file protection was at first not necessary on a personal machine. But nowadays these computers are often connected to other computers via LANs or other Internet connections. When other computers and other users can access the files on a PC, file protection becomes a necessary feature of an operating system.

MS-DOS and the Macintosh operating system initially lacked such protection mechanisms, which made it easy for malicious programs to destroy data. These programs may be self-replicating and may spread rapidly via worm or virus mechanisms.

With advances in hardware, desktop systems gained virtual memory and multitasking. Because of virtual memory, the entire program no longer needs to reside in main memory.

Multiprocessor Systems:

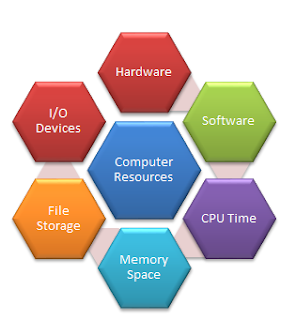

Uniprocessor systems contain only a single CPU. Multiprocessor systems consist of more than one processor in close communication. The processors share the computer bus, the system clock, and sometimes memory and I/O devices. These systems are also known as parallel systems or tightly coupled systems, and they are growing in importance.

· If one of the processors fails, the other processors should retrieve the interrupted process state so that the process can continue to execute.

· Context switching should be efficiently supported by the processors.

· These systems support large physical address space as well as virtual address space.

· Provision for IPC (interprocess communication), implemented in hardware so that it is easy and efficient.

Advantages:

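1. Increased throughput: With more processors, more work gets done in less time. The speed-up with N processors is less than N, however, because of the overhead of keeping all the processors working together correctly.

2. Economy of scale: Multiprocessor systems are cheaper than multiple single-processor systems because they share resources.

3. Increased reliability: The failure of one processor will not halt the system. It only slows the system down. This ability to keep providing service in proportion to the surviving hardware is called graceful degradation. Systems that go beyond graceful degradation, and keep operating despite the failure of any single component, are called fault-tolerant systems.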
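A small simulation can illustrate graceful degradation. The sketch below is hypothetical. It assumes identical processors and work that splits evenly. The names `assign_tasks` and `relative_capacity` are illustrative and do not come from any real operating system. When a processor fails, its share of the work moves to the survivors, and capacity drops in proportion.

```python
# Hypothetical sketch of graceful degradation in a multiprocessor system.
# Assumes identical processors and evenly divisible work; real systems
# lose more than this because of migration and synchronization overhead.

def assign_tasks(tasks, processors):
    """Distribute tasks round-robin over the processors that are still alive."""
    alive = [p for p, ok in processors.items() if ok]
    if not alive:
        raise RuntimeError("no surviving processors: the system halts")
    plan = {p: [] for p in alive}
    for i, task in enumerate(tasks):
        plan[alive[i % len(alive)]].append(task)
    return plan


def relative_capacity(processors):
    """Fraction of the original processors still working (True counts as 1)."""
    return sum(processors.values()) / len(processors)


cpus = {"cpu0": True, "cpu1": True, "cpu2": True, "cpu3": True}
work = [f"task{i}" for i in range(8)]

print(relative_capacity(cpus))           # 1.0
print(len(assign_tasks(work, cpus)))     # 4

cpus["cpu2"] = False                     # one processor fails
print(relative_capacity(cpus))           # 0.75: slower, but still running
print(sorted(assign_tasks(work, cpus)))  # ['cpu0', 'cpu1', 'cpu3']
```

The system halts only when no processor survives. Until then, each failure just shrinks the pool that `assign_tasks` draws from.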

There are two types of multiprocessing:

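A] Symmetric Multiprocessing (SMP):

· In SMP, each processor runs an identical copy of the operating system, and these copies communicate with one another as needed.

· All processors are peers. There is no master-slave relationship between them.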

B] Asymmetric Multiprocessing (ASMP):

· In ASMP, each processor is assigned a specific task.

· A master processor controls the system. The other processors either look to the master for instruction or have predefined tasks.

· This scheme defines a master - slave relationship.

· The master processor schedules and allocates work to the slave processors.

· Each processor has its own memory address space.
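The following sketches the master-slave relationship of ASMP. It is a simplified model, not how any particular kernel is written. The `Master` and `Slave` classes, the processor names, and the task kinds are all hypothetical. The master holds the queue and decides where every job runs. Each slave only executes the kind of work it was assigned.

```python
from collections import deque

# Hypothetical sketch of asymmetric multiprocessing (ASMP).
# Only the master schedules; each slave has one predefined kind of task.

class Slave:
    def __init__(self, name, role):
        self.name = name
        self.role = role      # the predefined task this processor handles
        self.done = []

    def run(self, job):
        self.done.append(job)


class Master:
    def __init__(self, slaves):
        self.slaves = {s.role: s for s in slaves}
        self.queue = deque()  # work waiting to be allocated by the master

    def submit(self, kind, job):
        self.queue.append((kind, job))

    def schedule(self):
        """Dispatch every queued job to the slave assigned to that kind of work."""
        while self.queue:
            kind, job = self.queue.popleft()
            self.slaves[kind].run(job)


io_cpu = Slave("cpu1", "io")
math_cpu = Slave("cpu2", "compute")
master = Master([io_cpu, math_cpu])

master.submit("io", "read sector 7")
master.submit("compute", "matrix multiply")
master.submit("io", "write log")
master.schedule()

print(io_cpu.done)    # ['read sector 7', 'write log']
print(math_cpu.done)  # ['matrix multiply']
```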

Distributed Systems:

A network is a communication path between two or more systems. Distributed systems depend on networking for their functionality. Networks vary by the protocols used, the distances between nodes, and the transport media. TCP/IP is the most common network protocol, although ATM and other protocols are in widespread use. Likewise, operating-system support for protocols varies.

Definition: A Distributed OS is a system that looks like an ordinary operating system to its users but it runs on multiple, independent CPUs.

Advantages:

1. Resource sharing: It allows for sharing of both hardware as well as software resources in an effective manner among all the computers and users.

2. Higher reliability: Reliability is the degree of tolerance against errors and component failures. Availability is a key aspect of reliability. It can be defined as the fraction of time for which the system is available for use. We can increase the availability of stored data by keeping copies on multiple hard disks located at different sites. Then, if one of the hard disks fails or becomes unavailable, another can be used. This, in turn, provides higher reliability.

3. Higher throughput rates with shorter response times.

4. Easier expansion: It is easy to extend power and functionality by adding additional resources.

5. Better price-performance ratio: Microprocessors keep getting cheaper while their computing power increases, which yields a better price-performance ratio.
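A short calculation makes the replication argument under "Higher reliability" concrete. Assume, hypothetically, that each of n disks is available independently with probability a. The data is unavailable only when all n copies are unavailable at the same moment, so the availability of the data is 1 - (1 - a)^n. The function name below is illustrative. The independence assumption is the key simplification, since disks at one site often fail together.

```python
# Hypothetical sketch: availability of data replicated on n disks at different sites.
# Assumes each disk is independently available with probability `a`.

def replicated_availability(a, n):
    """Probability that at least one of n independent replicas is available."""
    if not 0.0 <= a <= 1.0 or n < 1:
        raise ValueError("need 0 <= a <= 1 and n >= 1")
    return 1 - (1 - a) ** n


for n in (1, 2, 3):
    print(n, round(replicated_availability(0.99, n), 6))
# 1 0.99
# 2 0.9999
# 3 0.999999
```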

Disadvantages:

· They are hard to build.

· Few commercial examples of such systems are available.

· Increased overhead of protocols used in communication.

A] Client Server Systems:

- As PCs have become faster, more powerful and cheaper, designers have shifted away from centralized system architecture.

- Terminals connected to centralized systems are now being replaced by PCs.

- The user interface functionality that used to be handled directly by the centralized system is increasingly being handled by the PCs.

- As a result, centralized systems today act as server systems that satisfy requests generated by client systems.

- A server is typically a high-performance machine, and clients usually interact with it through a request-response mechanism.

- A client sends a request to the server. The server processes the request and generates a response for the client.

- Server systems can be broadly categorized as compute servers and file servers.

- Compute-server systems provide an interface to which clients can send requests to perform an action. In response, the server executes the action and sends the results back to the client.

- File server systems provide a file system interface where clients can create, update, read and delete files.
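The following sketches the request-response cycle of a file server. It is hypothetical. Requests are plain dictionaries instead of network messages, and the message format and the `FileServer` class are assumptions. The four operations mirror the create, update, read, and delete interface described above.

```python
# Hypothetical sketch of a file server's request-response handling.
# Files live in an in-memory dict; a real server would use a file system
# and receive requests over the network.

VALID_OPS = ("create", "read", "update", "delete")


class FileServer:
    def __init__(self):
        self.files = {}  # file name -> contents

    def handle(self, request):
        """Process one client request and return a response dict."""
        op, name = request.get("op"), request.get("name")
        if op not in VALID_OPS:
            return {"ok": False, "error": "unknown operation"}
        if op == "create":
            if name in self.files:
                return {"ok": False, "error": "exists"}
            self.files[name] = ""
            return {"ok": True}
        if name not in self.files:
            return {"ok": False, "error": "not found"}
        if op == "read":
            return {"ok": True, "data": self.files[name]}
        if op == "update":
            self.files[name] = request.get("data", "")
            return {"ok": True}
        del self.files[name]  # op == "delete"
        return {"ok": True}


server = FileServer()
print(server.handle({"op": "create", "name": "notes.txt"}))  # {'ok': True}
server.handle({"op": "update", "name": "notes.txt", "data": "hi"})
print(server.handle({"op": "read", "name": "notes.txt"}))    # {'ok': True, 'data': 'hi'}
server.handle({"op": "delete", "name": "notes.txt"})
print(server.handle({"op": "read", "name": "notes.txt"}))    # {'ok': False, 'error': 'not found'}
```

The client never touches `server.files` directly. Every interaction is a request followed by a response, which is what lets the two sides run on different machines.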

B] Peer-to-Peer Systems:

- The computer networks used in these applications consist of a collection of processors that do not share memory or a clock.

- Instead, each processor has its own local memory. The processors communicate with one another through various communication lines, such as high-speed buses or telephone lines.

- These systems are usually referred to as loosely coupled systems or distributed systems.

- A Network OS is an operating system that provides features such as file sharing across the network and that includes a communications scheme that allows different processors on different computers to exchange messages.
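The following simulation shows what "no shared memory" means for loosely coupled systems. It is hypothetical and involves no real networking: the `Node` and `Network` classes are illustrative, and the "network" is just a queue of messages. Each node keeps private local memory. The only way one node can affect another is by sending it a message.

```python
from collections import deque

# Hypothetical simulation of loosely coupled nodes that communicate only
# by message passing. No memory is shared between nodes.

class Node:
    def __init__(self, name):
        self.name = name
        self.local = {}  # private memory, never read directly by other nodes

    def receive(self, msg):
        key, value = msg
        self.local[key] = value


class Network:
    def __init__(self, nodes):
        self.nodes = {n.name: n for n in nodes}
        self.in_flight = deque()  # messages sent but not yet delivered

    def send(self, dest, msg):
        self.in_flight.append((dest, msg))

    def deliver_all(self):
        while self.in_flight:
            dest, msg = self.in_flight.popleft()
            self.nodes[dest].receive(msg)


a, b = Node("A"), Node("B")
net = Network([a, b])
a.local["x"] = 42
net.send("B", ("x", a.local["x"]))  # B learns x only through a message
print(b.local)  # {}  (nothing delivered yet)
net.deliver_all()
print(b.local)  # {'x': 42}
```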

Clustered Systems:

A clustered system is a group of computer systems connected by a high-speed communication link. Each computer system has its own memory and peripheral devices. Clustering is usually performed to provide high availability.

Like parallel systems, clustered systems gather together multiple CPUs to accomplish computational work. They differ from parallel systems, however, in that they are composed of two or more individual systems coupled together.

The definition of the term cluster is not concrete. The generally accepted definition is that clustered computers share storage and are closely linked via LAN networking. Clustering can be done in hardware, in software, or both. In hardware clustering, high-performance disks are shared. In software clustering, the cluster takes the form of unified control of the system.

A layer of cluster software runs on the cluster nodes. Each node can monitor one or more of the others over the LAN. If the monitored machine fails, the monitoring machine can take ownership of its storage and restart the applications that were running on the failed machine. The failed machine can remain down, but the users and clients of the application see only a brief interruption of service.

In asymmetric clustering, one machine is in hot-standby mode while the other runs the applications. The hot-standby host monitors the active server. If that server fails, the hot-standby host becomes the active server.
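Hot-standby monitoring can be sketched with heartbeats and a timeout. This is one common way to detect failure, but the text above does not say how the standby monitors the active server, so treat the heartbeat scheme as an assumption. The `HotStandby` class, the node names, and the timeout value are also hypothetical. Time is passed in explicitly as a simulated clock so the behavior is deterministic.

```python
# Hypothetical sketch of asymmetric clustering with a hot-standby node.
# Failure detection by heartbeat timeout is an assumption of this sketch.

class HotStandby:
    def __init__(self, timeout):
        self.timeout = timeout      # seconds of silence tolerated (assumed value)
        self.last_heartbeat = 0.0
        self.active = "server-A"    # hypothetical node names
        self.standby = "server-B"

    def heartbeat(self, now):
        """Called whenever the active server reports that it is alive."""
        self.last_heartbeat = now

    def check(self, now):
        """Run periodically on the standby; fail over if the active node is silent."""
        if self.standby is not None and now - self.last_heartbeat > self.timeout:
            # Failover: a real standby would now take ownership of the shared
            # storage and restart the applications (not modeled here).
            self.active, self.standby = self.standby, None
        return self.active


cluster = HotStandby(timeout=3.0)
cluster.heartbeat(now=1.0)
print(cluster.check(now=2.0))  # server-A: heartbeat is recent
print(cluster.check(now=5.0))  # server-B: silent for 4 s, so failover
```

The timeout is a trade-off. A short timeout detects failures quickly but may mistake a slow server for a dead one. A long timeout stretches the interruption users see.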

In symmetric clustering, two or more hosts run applications and monitor each other. This mode is more efficient, as it uses all of the available hardware. It does, however, require that more than one application be available to run.

Parallel clusters and clustering over a WAN are also possible. Parallel clusters allow multiple hosts to access the same data on the shared storage. Clusters provide all the key advantages of distributed systems, and they also provide better reliability than SMP.

Real Time Systems (RTOS):

A real-time operating system is one that must react to inputs and respond to them quickly. These systems cannot afford to be late in responding to an event.

A real-time system has well-defined, fixed time constraints. Processing must be done within the defined constraints, or the system will fail. A real-time system functions correctly only if it returns the correct result within its time constraints.

Examples of RTOS are the systems that control scientific experiments, medical imaging systems, industrial control systems, certain display systems, automobile engine fuel injection systems, home appliance controllers, weapon systems etc.

Deterministic scheduling algorithms are used in RTOS. Real-time systems are divided into two categories: hard real-time and soft real-time.

A hard real-time system guarantees that critical tasks are completed on time. This goal requires that all delays in the system be bounded, from the retrieval of stored data to the time that it takes the operating system to finish any request made of it. Hard real-time systems conflict with the operation of time-sharing systems, so the two cannot be mixed.
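The requirement that "all delays be bounded" can be illustrated as a simple budget check. This is a hypothetical sketch, not a real RTOS admission test. The stage names and microsecond figures are invented for illustration. A task is acceptable only if every stage has a known worst-case time and those times add up to no more than the deadline. A stage with no upper bound makes any guarantee impossible.

```python
# Hypothetical sketch of a hard real-time timing budget.
# Each stage has a worst-case delay in microseconds; None means "unbounded".

def meets_deadline(worst_case_us, deadline_us):
    """Return True only if every delay is bounded and their sum fits the deadline."""
    if any(d is None for d in worst_case_us.values()):
        return False  # an unbounded delay can never be guaranteed
    return sum(worst_case_us.values()) <= deadline_us


stages = {
    "interrupt latency": 20,
    "dispatch latency": 50,
    "read stored data": 300,
    "compute response": 400,
}
print(meets_deadline(stages, deadline_us=1000))  # True  (770 <= 1000)

stages["read stored data"] = None                # e.g. a disk read with no upper bound
print(meets_deadline(stages, deadline_us=1000))  # False
```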

A soft real-time system is a less restrictive type in which a critical real-time task gets priority over other tasks and keeps that priority until it completes. A real-time task cannot be kept waiting indefinitely for the kernel to run it. Soft real time is an achievable goal that can be mixed with other types of systems.

Soft real-time systems have more limited utility than hard real-time systems. They are risky to use for industrial control and robotics because they lack support for fixed time constraints. They are useful in many areas, such as multimedia, virtual reality, and advanced scientific projects like undersea exploration and planetary rovers.

Implementing soft real-time functionality requires two conditions. First, CPU scheduling must be priority-based. Second, dispatch latency must be small. These systems need advanced operating-system features that cannot be supported by hard real-time systems.
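The first condition, priority-based scheduling, can be sketched with a priority queue. This is a simplified, hypothetical model. All tasks arrive at time 0. Each selected task runs to completion, so there is no preemption. Dispatch latency, the second condition, is not modeled. The priority values, task names, and burst lengths are invented. Real-time tasks get a higher priority than ordinary tasks and keep it until they finish, so they always complete first.

```python
import heapq

# Hypothetical sketch of priority-based scheduling in a soft real-time system.
# Lower number = higher priority (an assumption of this sketch).
REALTIME, NORMAL = 0, 10


def run(tasks):
    """Run (priority, name, burst) tasks; return (name, completion_time) pairs."""
    ready = []
    for seq, (prio, name, burst) in enumerate(tasks):
        # seq breaks ties so equal-priority tasks run in arrival (FIFO) order
        heapq.heappush(ready, (prio, seq, name, burst))
    clock, finished = 0, []
    while ready:
        _prio, _seq, name, burst = heapq.heappop(ready)
        clock += burst  # the task keeps the CPU until it completes
        finished.append((name, clock))
    return finished


tasks = [
    (NORMAL, "editor", 5),
    (REALTIME, "audio", 2),
    (NORMAL, "compiler", 8),
    (REALTIME, "video", 3),
]
print(run(tasks))
# [('audio', 2), ('video', 5), ('editor', 10), ('compiler', 18)]
```

A real kernel would also preempt a running ordinary task as soon as a real-time task became ready. Keeping the time needed to do that small is the dispatch-latency condition.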

Handheld Systems:

The systems found in PDAs (personal digital assistants), such as Palm Pilots, are handheld systems. Some of these devices also use cellular telephony, with connectivity to networks such as the Internet.

The developers of handheld systems face many challenges, most of which are due to the limited size of such devices. Because of this limited size, most handheld devices have a small amount of memory, slow processors, and small display screens. The typical memory of such devices ranges from 512 KB to 8 MB. As a result, the operating system and applications must manage memory efficiently. This includes returning all allocated memory to the memory manager once it is no longer being used.
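The following sketches what "returning allocated memory to the memory manager" looks like in practice. It is a hypothetical fixed-block pool, not the allocator of any real handheld OS. The `BlockPool` class and the 4 KB / 1 KB sizes are illustrative, and memory is modeled only as block offsets. Once every block is handed out, further requests fail until a program frees a block it no longer needs.

```python
# Hypothetical sketch of a tiny fixed-block memory manager for a device
# with very little RAM. Memory is modeled only as offsets into one region.

class BlockPool:
    def __init__(self, total_bytes, block_bytes):
        self.block_bytes = block_bytes
        self.n_blocks = total_bytes // block_bytes
        self.free_blocks = list(range(self.n_blocks))  # indices of unused blocks

    def alloc(self):
        """Return the offset of a free block, or None if memory is exhausted."""
        if not self.free_blocks:
            return None
        return self.free_blocks.pop() * self.block_bytes

    def free(self, offset):
        """Give a block back to the memory manager so it can be reused."""
        index, rem = divmod(offset, self.block_bytes)
        if rem or not 0 <= index < self.n_blocks or index in self.free_blocks:
            raise ValueError("invalid or double free")
        self.free_blocks.append(index)


pool = BlockPool(total_bytes=4096, block_bytes=1024)
blocks = [pool.alloc() for _ in range(4)]
print(pool.alloc())               # None: every block is in use
pool.free(blocks[0])              # return memory once it is no longer needed
print(pool.alloc() == blocks[0])  # True: the freed block is reused
```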

The speed of the processor is a second issue of concern to developers of handheld devices. A faster processor requires more power. Including one in a handheld device would therefore require a larger battery, which would have to be replaced or recharged more frequently.

To minimize size, most handheld devices use smaller, slower processors that consume less power. Their display screens are also small, usually no more than 3 inches square. Tasks such as reading email or browsing web pages must therefore be condensed onto these smaller displays. One approach for displaying web content is web clipping, where only a small subset of a web page is delivered to and displayed on the handheld device.

Wireless technology, such as Bluetooth, allows remote access to email and web browsing. The limitations in the functionality of PDAs are balanced by their convenience and portability. Their use continues to expand, and additional features such as cameras and MP3 players extend their utility.