Computer System Operation:

The above figure shows a modern computer system. It consists of one or more CPUs and a number of device controllers connected through a common bus that provides access to shared memory.

The CPU and the device controllers can execute concurrently, so they compete for memory cycles. To ensure orderly access to shared memory, a memory controller is provided whose function is to synchronize access to the memory.

Storage Structure:

RAM, or main memory, is the only large storage area that the processor can access directly. It is commonly implemented in a semiconductor technology called Dynamic Random Access Memory (DRAM), which forms an array of memory words, each with its own address. The following figure shows the storage device hierarchy:

Programs and data cannot reside in main memory permanently, for the following two reasons:

1. Main memory is usually too small to store all the needed programs and data permanently.

2. Main memory is a volatile storage device that loses its contents when power is turned off.

So, most computer systems provide secondary storage as an extension to main memory. The main requirement for secondary storage is that it should be able to hold large quantities of data permanently.

IO Structure:

Storage is one of the kinds of IO device in a computer system. A large portion of OS code is dedicated to managing IO because IO is important for both the reliability and the performance of a system.

The computer does not have direct random access to any byte on the disk at any time: the magnetic platters in the drive rotate, and the magnetic heads move in and out in order to reach any part of the surface area that holds data. Access therefore usually involves a disk rotation delay and also a head positioning delay.
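The two delays can be estimated with simple arithmetic. The sketch below assumes a hypothetical drive spinning at 7200 RPM with a 9 ms average head positioning time; both figures are illustrative assumptions, not values from the text. On average the platter has to turn half a revolution before the wanted sector arrives under the head.

```python
def average_rotational_delay_ms(rpm: float) -> float:
    """Average rotational delay: half of one full revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


def average_access_time_ms(rpm: float, avg_seek_ms: float) -> float:
    """Head positioning (seek) delay plus average rotational delay."""
    return avg_seek_ms + average_rotational_delay_ms(rpm)


if __name__ == "__main__":
    # Illustrative values only (assumed, not taken from a real data sheet).
    rpm = 7200
    avg_seek_ms = 9.0
    print(f"rotational delay: {average_rotational_delay_ms(rpm):.2f} ms")
    print(f"total access time: {average_access_time_ms(rpm, avg_seek_ms):.2f} ms")
```

With these assumed numbers the mechanical delays add up to roughly 13 ms per access, which is why disk access is so much slower than main memory access.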

A computer system consists of CPUs and multiple device controllers, connected through a common bus. Each device controller is in charge of a specific type of device. Depending on the controller, there may be more than one attached device. The device controller is responsible for moving the data between the peripheral devices that it controls and its local buffer storage.

Operating systems have a device driver for each device controller. A device driver is software that communicates with the controller: it understands the device controller and presents a uniform interface to the device to the rest of the OS. Most device drivers run in kernel mode.

An IO operation is performed using the following steps:

1. The device driver loads the appropriate registers within the device controller.

2. The device controller examines the contents of these registers to determine what action to take.

3. The controller starts the transfer of data from the device to its local buffer. Once the transfer of data is complete, the device controller informs the device driver via an interrupt that it has finished its operation.

4. The device driver then returns control to the OS, possibly returning the data or a pointer to the data if the operation was a read.
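The four steps above can be traced in a toy model. This is only a sketch: the class names, the `registers` dictionary and the `read_block` method are hypothetical, and a plain function call stands in for the hardware interrupt, which on a real machine arrives asynchronously.

```python
class DeviceController:
    """Toy controller with command registers and a local buffer (hypothetical model)."""

    def __init__(self, device_blocks, on_interrupt):
        self.device_blocks = device_blocks   # stands in for the physical device
        self.registers = {"command": None, "block": None}
        self.local_buffer = None
        self.on_interrupt = on_interrupt     # called when the operation finishes

    def start(self):
        # Step 2: examine the registers to decide what action to take.
        if self.registers["command"] == "read":
            # Step 3: transfer data from the device into the local buffer...
            self.local_buffer = self.device_blocks[self.registers["block"]]
            # ...then signal completion with an "interrupt".
            self.on_interrupt()
        else:
            raise ValueError("unsupported command in this sketch")


class DeviceDriver:
    """Toy driver that hides the controller's registers behind read_block()."""

    def __init__(self, device_blocks):
        self.controller = DeviceController(device_blocks, self.handle_interrupt)
        self.completed = False

    def handle_interrupt(self):
        self.completed = True

    def read_block(self, block_number):
        self.completed = False
        # Step 1: load the appropriate registers within the controller.
        self.controller.registers["command"] = "read"
        self.controller.registers["block"] = block_number
        self.controller.start()
        # Step 4: after the interrupt, return the data to the caller (the OS).
        assert self.completed, "controller did not signal completion"
        return self.controller.local_buffer


if __name__ == "__main__":
    driver = DeviceDriver({0: b"boot", 1: b"data"})
    print(driver.read_block(1))
```

The rest of the program only ever calls `read_block`, which mirrors how a driver presents a uniform interface while keeping register details to itself.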

IO requests can be handled in two ways: synchronously or asynchronously.

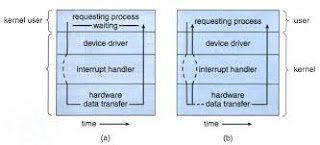

1. Synchronous IO:

A program makes the appropriate OS call. Because the CPU is now executing OS code, the original program’s execution is halted, i.e. it waits. If the CPU always waits for IO completion, at most one IO request is outstanding at a time. So, whenever an IO interrupt occurs, the OS knows exactly which device is interrupting. However, this approach excludes concurrent IO operations to several devices and also excludes the possibility of overlapping useful computation with IO.

2. Asynchronous IO:

A better alternative is to start the IO and then continue processing other operating system or user program code. We also need to be able to keep track of many IO requests at the same time. For this purpose the OS uses a table containing an entry for each IO device: the device status table. Each table entry indicates the device type, address, and state (not functioning, idle or busy).

Since other processes may issue requests to the same device, the OS also maintains a wait queue, a list of waiting requests, for each IO device. An IO device interrupts when it needs service. When an interrupt occurs, the operating system first determines which IO device caused the interrupt. It then indexes into the device status table to determine the status of that device, and modifies the table entry to reflect the occurrence of the interrupt.
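A device status table with per-device wait queues might be sketched as follows. The class and method names are hypothetical, and the policy of starting the next queued request straight from the interrupt handler is an assumption made to keep the example small.

```python
from collections import deque
from dataclasses import dataclass, field
from enum import Enum


class DeviceState(Enum):
    NOT_FUNCTIONING = "not functioning"
    IDLE = "idle"
    BUSY = "busy"


@dataclass
class DeviceEntry:
    device_type: str
    address: int
    state: DeviceState = DeviceState.IDLE
    wait_queue: deque = field(default_factory=deque)  # requests waiting for the device


class DeviceStatusTable:
    def __init__(self):
        self.entries = {}  # device name -> DeviceEntry

    def add_device(self, name, device_type, address):
        self.entries[name] = DeviceEntry(device_type, address)

    def request_io(self, name, request):
        """Start the request if the device is idle, otherwise queue it."""
        entry = self.entries[name]
        if entry.state is DeviceState.NOT_FUNCTIONING:
            raise RuntimeError(f"{name} is not functioning")
        if entry.state is DeviceState.IDLE:
            entry.state = DeviceState.BUSY
            return "started"
        entry.wait_queue.append(request)
        return "queued"

    def handle_interrupt(self, name):
        """Record completion; hand back the next waiting request, if any."""
        entry = self.entries[name]
        if entry.wait_queue:
            return entry.wait_queue.popleft()  # device stays BUSY with this request
        entry.state = DeviceState.IDLE
        return None
```

For example, two back-to-back requests to the same disk yield `"started"` and then `"queued"`; the first interrupt dequeues the second request, and the next interrupt returns the device to the idle state.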

The main advantage of asynchronous IO is increased system efficiency. While IO is taking place, the CPU can be used for processing or for starting IO to other devices. Because IO can be slow compared to processor speed, overlapping it with computation lets the system make efficient use of its facilities.
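The effect of overlapping can be imitated at user level. In this sketch, worker threads and `time.sleep` stand in for devices that are busy transferring data; the function names and the 0.2 second delays are assumptions chosen for illustration, and this is not how a kernel actually schedules IO.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_io(device: str, seconds: float) -> str:
    """Stand-in for a device transfer; sleeping models the device being busy."""
    time.sleep(seconds)
    return f"{device} done"


def compute(n: int) -> int:
    """Useful CPU work that can proceed while the IO is outstanding."""
    return sum(i * i for i in range(n))


def run_overlapped():
    with ThreadPoolExecutor(max_workers=2) as pool:
        # Start IO on two devices without waiting for either to finish.
        disk = pool.submit(slow_io, "disk", 0.2)
        net = pool.submit(slow_io, "network", 0.2)
        # Continue processing while both requests are outstanding.
        total = compute(100_000)
        # Collect the IO results once they are needed.
        return total, disk.result(), net.result()


if __name__ == "__main__":
    start = time.perf_counter()
    print(run_overlapped())
    print(f"elapsed: {time.perf_counter() - start:.2f} s")
```

Calling `slow_io` twice in sequence and then `compute` would spend at least 0.4 seconds just waiting, whereas the overlapped version waits on both simulated devices at the same time while the computation runs.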
